Reflections on Entrust's Distrust

What happened, why now, and what does this mean for the future of WebPKI

On June 27th, 2024 Ryan Dickson of the Chrome Root Program announced that certificates issued by Entrust will no longer be trusted by the Chrome Root Program after October 31st, 2024.

This distrust event marks a momentous occasion for WebPKI. Entrust has historically played an important role in public trust. The distrust action by the Chrome Root Program may come as a surprise to many who haven't followed the nitty-gritty details of Entrust's dysfunctional relationship with its role as a public certificate authority.

Entrust’s importance stems not from the number of certificates they issue, but from who they issue them to. While they don’t even show up with their own line item on Merkle Town, their subscribers are typically enterprise-scale organizations (governments, airlines, banks, and other major companies): effectively, subscribers with revenues in the billions of dollars.

It’s important to note that at the time of writing, Entrust has only been distrusted by the Chrome Root Program. This distrust does not extend to the other root programs (yet).

Let’s first clarify what didn’t happen: Entrust did not have an explosive incident where it became immediately obvious to everyone that they can no longer be trusted. Instead, Entrust was distrusted due to a culmination of:

Broken promises

Technical and managerial debt

Systemic failure in understanding the requirements of being a CA

A mentality of being “too big to fail”

Historically weak enforcement by the community and root programs

How does the WebPKI model work anyway?

Before we can analyze why and how Entrust failed in its duty as a CA, we need to discuss how the WebPKI model works and how enforcement is carried out. I don’t intend this to be a history lesson, so I’ll be focusing on how it works today (as of the time of writing this piece). If you're already familiar with this process, feel free to skip to the next section.

First, let’s list the various important communities, forums, and mailing lists:

CCADB: The “Common CA Database”. A repository of information operated by some root programs, and effectively all relevant CAs. CA participation in the CCADB repository is mandatory.

CA/Browser Forum: A coordination platform for setting the general rules of being a Certificate Authority. Members are effectively all publicly trusted CAs, many root programs, and interested third parties. This body makes most of the rules regarding CAs.

Bugzilla: The Mozilla bug tracking software. In this context, it’s specifically the Bugzilla categories that relate to Mozilla’s handling of its root program. CA incidents are tracked here.

MDSP mailing list: The Mozilla “dev-security-policy” mailing list. A home mainly used for discussions about interpretation of the rules and for Mozilla’s updates to the community on how they operate the root store. It also ends up acting as a catch-all for CA-related business.

CCADB mailing list: Similar to the MDSP, but generally less activity. More “formal” discussions take place here.

CA/Browser Forum Mailing Lists: These mailing lists generally contain discussions of currently proposed rules. Voting on proposals also happens in these mailing lists.

Generally speaking, every “root store” has a set of rules for a CA to follow before that CA's roots are accepted into the store. A “root store” is just a fancy term for anything that 1) establishes and validates TLS sessions and 2) limits which Certificate Authorities can be used for those TLS sessions.

For example, if curl decided to operate a root store, they could do so. Curl could add whatever rules it wants for the CAs it would accept in TLS sessions made with curl. However, most software that could be a root program instead defers the decision on “what roots to trust” to the user, or the operating system, or something in between. Effectively, these applications delegate the responsibility of being a root store to another entity.
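
To make the two options concrete, here is a minimal Python sketch of a TLS client that either acts as its own root store or delegates to the operating system. The `make_client_context` helper and its `own_roots_pem` parameter are hypothetical names chosen for illustration; only the `ssl` module calls come from the standard library.

```python
import ssl
from typing import Optional


def make_client_context(own_roots_pem: Optional[str] = None) -> ssl.SSLContext:
    """Build a TLS client context with an explicit choice of root store."""
    # PROTOCOL_TLS_CLIENT enables certificate and hostname verification
    # and starts out with an empty set of trusted roots.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    if own_roots_pem is not None:
        # Acting as our own root store: trust only the roots in this file.
        ctx.load_verify_locations(cafile=own_roots_pem)
    else:
        # Delegating: trust whatever roots the operating system ships.
        ctx.load_default_certs()
    return ctx
```

Calling `make_client_context()` with no argument is the delegation case most software ends up in; passing a PEM bundle is the "run your own root store" case, where deciding which roots belong in that file becomes the application's responsibility.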

As you can imagine, with this model there are going to be quite a few root stores, each with its own set of rules. Some root stores are going to matter more than others. Some root stores may just be a formality.

These root stores have a need to coordinate with each other, and with the CAs. So they’ve generally agreed on mostly similar rules between each other in the form of the Baseline Requirements, and other documents that come out of the CA/Browser Forum. This means that a CA can effectively get into most of the root programs by following one set of baseline rules, and then some additional per-root-store rules.

These rules for CAs are extensive. They set requirements all the way from the software lifecycle and patching policy, through the operating systems used on the servers, down to the physical security of the servers that are responsible for certificate issuance. Root programs enforce these rules through several avenues:

Self-reporting of compliance incidents

Audits from qualified auditors

Automated monitoring

Community and stakeholder monitoring

As you can imagine, with how many rules there are for these CAs, and how often these rules change, there’s always a chance for a CA to be in non-compliance. The Mozilla Root Program specifically requires that any failure against these rules MUST be reported according to the rules provided by the CCADB.

To simplify: any deviation from the rules MUST be reported in Bugzilla.

How did Entrust fail?

As stated earlier, Entrust did not have one big explosive incident. The current focus on Entrust started with this incident. On its surface, the incident was a simple misunderstanding. Up until the SC-62v2 ballot, the cpsUri field in the certificate policies extension was allowed to appear on certificates. The ballot changed the rules so that the field is now considered “not recommended”.

However, this ballot only changed the Baseline Requirements and made no stipulation about how Extended Validation certificates must be profiled. The Extended Validation Guidelines (EVGs) still contained rules requiring the cpsUri field, so an EV certificate issued without it is misissued.
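
The interaction between the two rule sets can be sketched as a toy profile check. This is only an illustration of the rules as described above, not how any real linter is implemented; the `check_cps_uri` function, its parameters, and the message strings are all hypothetical.

```python
from typing import List


def check_cps_uri(cert_type: str, has_cps_uri: bool) -> List[str]:
    """Return findings for the cpsUri field under the two rule sets."""
    findings = []
    # EVGs (as described in the text): EV certificates must carry cpsUri.
    if cert_type == "EV" and not has_cps_uri:
        findings.append("error: EVGs require cpsUri on EV certificates")
    # Baseline Requirements after SC-62v2: the field is not recommended.
    if has_cps_uri:
        findings.append("notice: BRs mark cpsUri as not recommended")
    return findings


print(check_cps_uri("EV", has_cps_uri=False))
# ['error: EVGs require cpsUri on EV certificates']
```

The point of the sketch is that dropping the field satisfies the recommendation in one document while violating the requirement in the other, which is how a profile change made in good faith can still produce misissued EV certificates.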

When a CA has an incident like this, the response is simple:

Stop misissuance immediately.

Fix the certificate profile so you can resume issuance.

In parallel, figure out how you ended up missing this rule and what the root cause of missing this rule was.

Revoke the misissued certificates within 120 hours of learning about the incident.

Provide action items that a reasonable person would read and agree that these actions would prevent an incident like this happening again.
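
The 120-hour revocation step above is simple date arithmetic, which is part of why missing it draws so little sympathy. A minimal sketch, assuming the clock starts when the CA learns about the incident; the function names and the example timestamp are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# The 120-hour (5-day) revocation window described in the steps above.
REVOCATION_WINDOW = timedelta(hours=120)


def revocation_deadline(learned_at: datetime) -> datetime:
    """Latest moment the misissued certificates may be revoked."""
    return learned_at + REVOCATION_WINDOW


def revoked_on_time(learned_at: datetime, revoked_at: datetime) -> bool:
    """True if revocation happened inside the window."""
    return revoked_at <= revocation_deadline(learned_at)


# Hypothetical timestamp, for illustration only.
learned = datetime(2024, 3, 1, 9, 0, tzinfo=timezone.utc)
print(revocation_deadline(learned).isoformat())  # 2024-03-06T09:00:00+00:00
```

Anything revoked after that timestamp becomes its own "failure to revoke on time" incident, on top of the original misissuance.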

When I asked Entrust whether they had stopped issuance yet, they said they hadn’t, and that they didn’t plan to. This is where Entrust went from an accidental incident to willful misissuance. This distinction is an important one: Entrust had started knowingly misissuing certificates. Entrust received a lot of push-back from the community over this. This is a line that a CA shouldn’t, under any circumstances, cross. Entrust continued to simply not give a shit even after Ben Wilson of the Mozilla Root Program chimed in and said that what Entrust was doing was not acceptable. Entrust only started taking action after Ryan Dickson of the Chrome Root Program also chimed in to say this was unacceptable.

Entrust's delayed response to the initial incident, spanning over a week, compounded the problem by creating a secondary “failure to revoke on time” incident. As these issues unfolded, a flurry of questions arose from the community. Entrust's responses were often evasive or minimal, further exacerbating the situation. This pattern of behavior proved increasingly frustrating, prompting me to delve deeper into Entrust's past performance and prior commitments.

In one of my earlier posts, I found that Entrust had made the promise that:

We will not make the decision not to revoke.

We will plan to revoke within the 24 hours or 5 days as applicable for the incident.

We will provide notice to our customers of our obligations to revoke and recommend action within 24 hours or 5 days based on the BR requirements.

This pattern of behavior led to a troubling cycle: Entrust making promises, breaking them, then making new promises only to break those as well.

As this unfolded, Entrust and the community uncovered an alarming number of operational mistakes, culminating in a record 18 incidents within just four months. Notably, about half of these incidents involved Entrust offering various excuses for failing to meet the 120-hour certificate revocation deadline—a requirement they had ironically voted to implement themselves.

I do want to highlight that the number of incidents is not necessarily an indication of CA quality. The worst CA is the CA that has no incidents, as that generally indicates they’re either not self-reporting or not even aware that they’re misissuing.

Due to the sheer number of incidents, and Entrust’s poor responses up until this point, Mozilla asked Entrust to provide a detailed report of these recent incidents. Mozilla specifically asked Entrust to provide information regarding:

The factors and root causes that lead to the initial incidents, highlighting commonalities among the incidents and any systemic failures;

Entrust’s initial incident handling and decision-making in response to these incidents, including any internal policies or protocols used by Entrust to guide their response and an evaluation of whether their decisions and overall response complied with Entrust’s policies, their practice statement, and the requirements of the Mozilla Root Program;

A detailed timeline of the remediation process and an apportionment of delays to root causes; and

An evaluation of how these recent issues compare to the historical issues referenced above and Entrust’s compliance with its previously stated commitments.

Mozilla also asked that the proposals meet the following requirements:

Clear and concrete steps that Entrust proposes to take to address the root causes of these incidents and delayed remediation;

Measurable and objective criteria for Mozilla and the community to evaluate Entrust’s progress in deploying these solutions; and

A timeline for which Entrust will commit to meeting these criteria.

Mozilla gave Entrust a one-month deadline to complete this report.

Mozilla's email served a dual purpose: it was both a warning to Entrust and an olive branch, offering a path back to proper compliance. This presented Entrust with a significant opportunity. They could have used this moment to demonstrate to the world their understanding that CA rules are crucial for maintaining internet security and safety, and that adhering to these rules is a fundamental responsibility. Moreover, Entrust could have seized this chance to address the community, explaining any misunderstandings in the initial assessment of these incidents and outlining a concrete plan to avoid future revocation delays.

Unfortunately, Entrust totally dropped the ball on this one. Their first report was a rehash of what was already on Bugzilla, offering nothing new. Unsurprisingly, this prompted a flood of questions from the community. Entrust's response? They decided to take another crack at it with a second report. They submitted this new report a full two weeks after the original deadline.

In their second report, Entrust significantly changed their tone, adopting a much more apologetic stance regarding the incidents. However, this shift in rhetoric wasn't matched by their actions. While expressing regret, Entrust was still:

Overlooking certain incidents

Delaying revocations of existing misissuances

Failing to provide concrete plans to prevent future delayed revocations

An analysis of these 18 incidents and Entrust's responses serves as a prime example of mishandled public communications during a crisis.

The consensus among many community members is that Entrust will always prioritize their certificate subscribers over their obligations as a Certificate Authority. This practice fundamentally undermines internet security for everyone. Left unchecked, it creates a dangerous financial incentive for other CAs to ignore rules when convenient, simply to avoid the uncomfortable task of explaining to subscribers why their certificates need replacement. Naturally, customers prefer CAs that won't disrupt their operations during a certificate's lifetime. However, for CAs that properly adhere to the rules, this is an impossible guarantee to make.

Furthermore, these incidents were not new to Entrust. As I’ve covered in earlier posts, Entrust has continuously demonstrated that they’re unable to complete a mass revocation event within the 120 hours defined by the Baseline Requirements. This pattern of behavior suggests a systemic issue rather than isolated incidents.

Weak Enforcement by Root Programs

Despite there being over a dozen root programs, there are practically only four that are existentially important for a Certificate Authority:

Mozilla Root Program: Used by Firefox and practically all Linux distributions and FOSS software.

Chrome Root Program: Used by Chrome (the browser and the OS). Correction: an earlier version of this entry added “and some Androids”, but the Chrome Root Program is not used by Android devices as far as I know. AOSP (the Android source code) uses the Mozilla Root Program for its root store. Note that each Android-flavored OS (e.g. Samsung) may use its own root store.

Apple Root Program: Used by everything Apple.

Microsoft Root Program: Used by everything Microsoft.

Enforcement of a CA’s operational rules has struggled in the past. How can a root program properly enforce rules when it has limited itself to effectively one method of enforcement: the binary decision of Trust or Distrust?

I’ve covered some ideas on how enforcement can improve in an earlier post.

Up until this distrust event, root programs have essentially been playing a game of “promise me you'll do better” with CAs, hoping this would solve operational problems. This approach has had mixed results. Some CAs, like Sectigo and Google Trust Services, managed to significantly improve their operations. Entrust, however, is a different story. In their case, promises have proven to be empty words.

Among these root programs, one has effectively abandoned its duty in the ecosystem: The Microsoft Root Program. This program has taken a back seat in fulfilling its responsibilities as a root authority. Such lack of care and failure to meet its obligations may not be entirely surprising, given recent news coming out of Microsoft. It is hoped that this distrust event will serve as a wake-up call for Microsoft to start properly participating in the ecosystem.

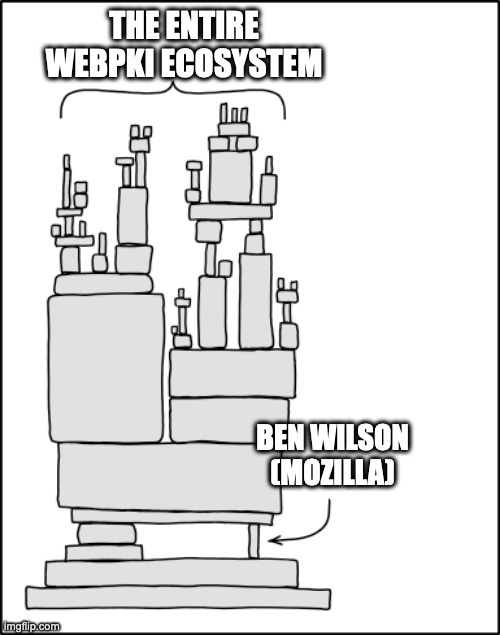

A significant portion of the root program side of WebPKI is handled by Mozilla. The Mozilla Root Program is the most important root program of the WebPKI due to its enforcement of much of the public disclosure requirements for CAs. Mozilla provides the majority of the resources, infrastructure, and triaging of CA incidents. Despite its significance, the program faces challenges:

Many CAs and stakeholders mistakenly assume the Mozilla Root Program only applies to Firefox

This misconception limits Mozilla's ability to enforce rules through distrust actions

Unilateral action by Mozilla against a misbehaving CA could potentially harm Mozilla itself

Such actions might not achieve the desired goals due to the limited perceived scope of the program

This situation highlights the strange dynamics within the WebPKI ecosystem and the disproportionate responsibility shouldered by Mozilla in maintaining its integrity.

For example, if The Linux Foundation were to more formally delegate their root program operations to Mozilla, it would give Mozilla much more capability to enforce rules for these CAs. Furthermore, if Microsoft chooses to continue stepping back from their duties, they could consider making a statement that they will honor the trust/distrust decisions made by Mozilla. Beyond that, Microsoft could financially support Mozilla to help them effectively staff their CA program. This support would be crucial in strengthening Mozilla's role in the WebPKI ecosystem.

While on this topic, it's worth noting that the Apple Root Program, like the Chrome Root Program, requires Certificate Transparency. However, Apple doesn't operate any Certificate Transparency Log Servers to help distribute the load from the few existing CT servers. If the Apple Root Program is going to mandate Signed Certificate Timestamps (SCTs) in certificates, the least they could do is operate a few of these servers themselves to help spread the load across the ecosystem.

WebPKI is changing

This distrust event is possibly a turning point for WebPKI. If the Root Programs clearly communicate that they won't tolerate repeated incidents of operational failures, revocation delays, and other violations of the Baseline Requirements going forward, many CAs will likely realize the importance of investing in their operations to meet their obligations.

Furthermore, this distrust event has shown the community that no CA is "too big to fail." All CAs must follow the rules, and when they fail to do so, they need to demonstrate a clear path of improvement to prevent future repetitions. This shift helps root programs move from constantly playing catch-up and being on the defensive, to focusing on future developments such as shorter certificate lifetimes, post-quantum cryptography, and automated certificate issuance.

The increased emphasis on enforcement becomes even more critical as post-quantum cryptography may necessitate fundamental changes in WebPKI to ensure we can all safely establish TLS sessions in the future.

I especially expect to see a significant decrease in “delayed revocation” incidents, since most of those incidents are effectively CAs making up excuses for not revoking on time. Now those CAs know that this behavior will not be tolerated.

Looking Ahead

One of the simplest ways CAs can make their work significantly easier is to require their subscribers to use automation and certificate lifecycle management. Certificate lifecycle management also includes figuring out that your certificate is about to be, or has been, revoked, and quickly replacing it. Fortunately, we have ACME and ARI (ACME Renewal Information) for handling certificate lifecycle management.
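
As a rough illustration of what ARI gives an automated client: the CA publishes a suggested renewal window per certificate, and the client picks a random time inside it. The sketch below assumes a renewal-info object with a `suggestedWindow` containing `start` and `end` timestamps, which is the shape used by the ARI specification as best understood here; the helper names and example timestamps are hypothetical, and fetching the object from the CA is left out.

```python
import random
from datetime import datetime, timedelta, timezone


def parse_rfc3339(ts: str) -> datetime:
    # datetime.fromisoformat() only accepts a trailing "Z" on Python 3.11+,
    # so normalize it to an explicit UTC offset first.
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def pick_renewal_time(renewal_info: dict, rng: random.Random) -> datetime:
    """Pick a uniformly random instant inside the suggested window."""
    window = renewal_info["suggestedWindow"]
    start = parse_rfc3339(window["start"])
    end = parse_rfc3339(window["end"])
    offset = rng.uniform(0, (end - start).total_seconds())
    return start + timedelta(seconds=offset)


def should_renew_now(renewal_info: dict, now: datetime, rng: random.Random) -> bool:
    """Renew immediately if the picked time has already passed."""
    return pick_renewal_time(renewal_info, rng) <= now


# Hypothetical response body, for illustration only.
info = {"suggestedWindow": {"start": "2024-07-01T00:00:00Z",
                            "end": "2024-07-03T00:00:00Z"}}
```

When a CA has to revoke, it can publish a window that is already in the past, so a client polling this information renews right away instead of waiting for an email to reach a human.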

Beyond that, many of the incidents we’ve seen in the past few months have been limited to Organization Validated (OV) and Extended Validation (EV) certificates. I think it’s very reasonable to ask why we are still bothering with these certificates. They’re significantly harder to automate, and they don’t really provide any extra assurance in any popular user agent.

Some commentary I’ve seen about this distrust is “Why does it matter if the Country in a certificate is written as ‘uS’ or ‘us’ instead of ‘US’?” I actually agree with you! However, EV and OV certificates do require those fields, and to prevent a regression to the mean, we need to ensure that those values are properly represented. The way to avoid this mess is to switch to Domain Validated (DV) certificates.
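
For a sense of how small the check in question is, here is a minimal sketch of a country-field lint. It only checks the shape of the value (two uppercase ASCII letters); a real linter would also compare against the list of assigned country codes. The `lint_country` name is hypothetical.

```python
def lint_country(value: str) -> bool:
    """True if the value looks like a two-letter uppercase country code."""
    return (
        len(value) == 2
        and value.isascii()
        and value.isalpha()
        and value.isupper()
    )


for candidate in ("US", "uS", "us"):
    print(candidate, lint_country(candidate))
# US True
# uS False
# us False
```

A check this simple, run before issuance, is the kind of guardrail that keeps a typo in a subscriber-supplied field from turning into a revocation event.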

As we move forward, the WebPKI ecosystem has an opportunity to evolve. We should strive for:

More robust and nuanced enforcement mechanisms beyond binary trust/distrust decisions.

Stronger involvement in the community by the root programs (looking at you, Microsoft).

A shift towards automation and simpler certificate types to reduce human error and improve security.

Increased transparency and accountability from CAs, with clear consequences for repeated failures.

Because a Linux or embedded “trust store” is just one PEM file or a bunch of them, with no way to attach additional conditions, these systems can't use Mozilla's trust store fully. For example, if a root is distrusted only for certificates with a notBefore date after 2024-01-01, ca-certificates doesn't carry that information, so the root stays fully trusted until about 398 days later, when Mozilla stops publishing the certificate and it is removed from the list.
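
The gap described in this comment can be sketched with a toy model: a root entry that may carry a "distrust after" date, and a verifier that either honors that condition or, like a plain PEM bundle, has no way to see it. The `Root` class, `trusts_leaf` function, and the example root name are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Root:
    name: str
    # None means the root is trusted with no condition attached.
    distrust_after: Optional[datetime] = None


def trusts_leaf(root: Root, leaf_not_before: datetime, honor_conditions: bool) -> bool:
    """Decide whether a leaf chaining to this root is accepted."""
    if honor_conditions and root.distrust_after is not None:
        # Conditional distrust: only leaves issued up to the cutoff pass.
        return leaf_not_before <= root.distrust_after
    # A bare PEM bundle cannot express the cutoff, so everything passes.
    return True


root = Root("Example Root", datetime(2024, 1, 1, tzinfo=timezone.utc))
new_leaf = datetime(2024, 6, 1, tzinfo=timezone.utc)
print(trusts_leaf(root, new_leaf, honor_conditions=True))   # False
print(trusts_leaf(root, new_leaf, honor_conditions=False))  # True
```

The second call is the PEM-bundle case: the same root, the same leaf, but the condition is lost in translation and the certificate is accepted.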